Rohan Paul (@rohanpaul_ai)

2026-01-11 | ❤️ 390 | 🔁 56

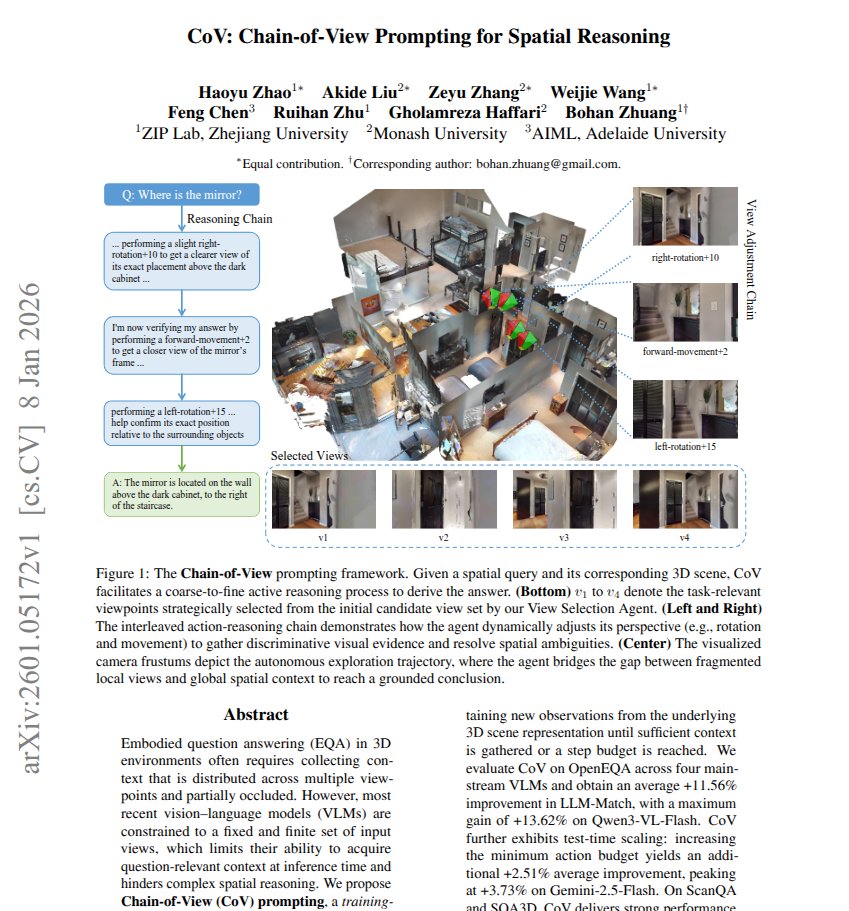

Chain-of-View makes vision language models move through a 3D scene before answering spatial questions.

Instead of fixed frames, the method takes a few camera moves and checks again before answering.

Its a training-free prompt that turns 3D question answering into think, move, look, then answer.

On OpenEQA, this add-on improves answer scores by about 11.56% on average, reaching up to 13.62%, without training.

Embodied question answering means answering a question by looking around a 3D room, and fixed camera frames often miss the needed clue.

Chain-of-View first picks a few promising starting views from the available frames, so the model begins near what the question is about.

It then repeats a loop at answer time, where it reasons, takes 1 small camera move or rotation in the 3D scene, and checks again.

The main takeaway is that actively gathering extra views can improve spatial answers while keeping the base model unchanged.

Paper Link – arxiv. org/abs/2601.05172

Paper Title: “CoV: Chain-of-View Prompting for Spatial Reasoning”

미디어

🔗 Related

Auto-generated - needs manual review