What if your robot or car could see depth more clearly than a top-tier RGB-D camera?

Researchers from Ant Group present LingBot-Depth.

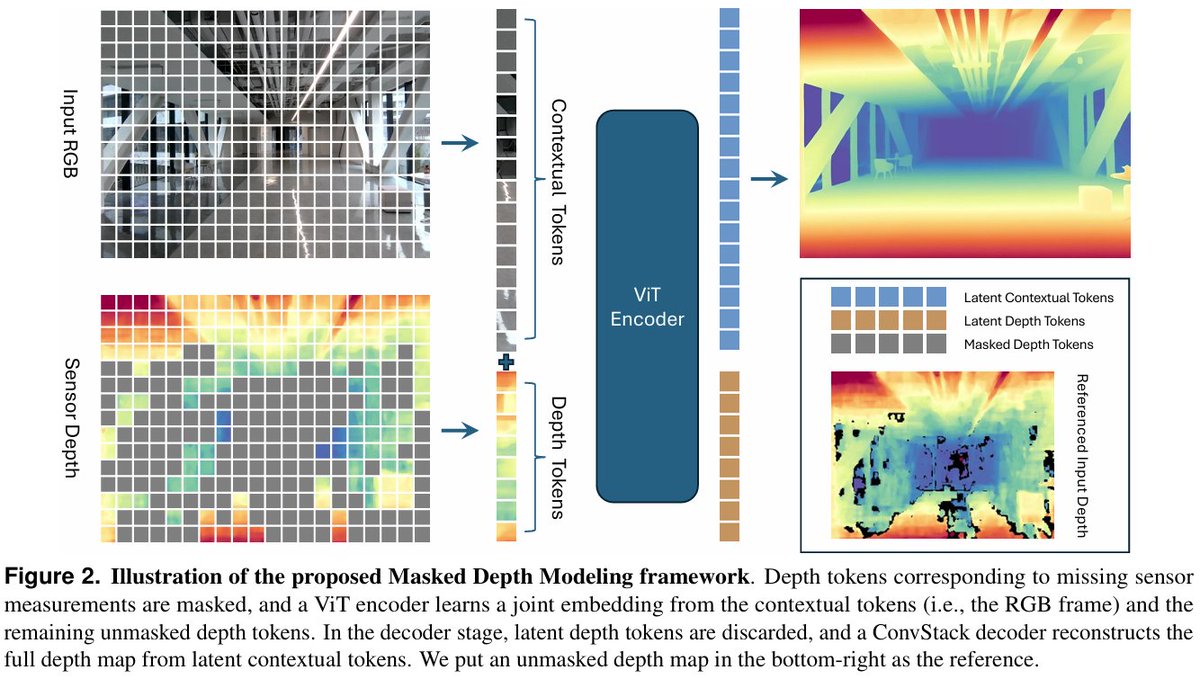

It treats missing or erroneous sensor depth as “masked” regions and uses visual context from the color image to fill in and refine incomplete depth maps.
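The masking idea can be made concrete with a minimal sketch. It assumes a raw depth map in meters where missing readings show up as NaN, zero, or out-of-range values. The `min_depth`/`max_depth` thresholds and both function names are hypothetical. This is not LingBot-Depth's method: the model fills masked pixels using learned visual context, while this sketch uses a simple non-learned neighbor-averaging fill. Its only purpose is to show what “masked” depth is and what a naive baseline does with it.

```python
import numpy as np


def depth_invalid_mask(raw_depth: np.ndarray, min_depth: float = 0.1,
                       max_depth: float = 10.0) -> np.ndarray:
    """Hypothetical helper (not part of LingBot-Depth).

    Returns True where a sensor reading should be treated as masked:
    NaN/inf, or outside the assumed valid range [min_depth, max_depth].
    """
    invalid = ~np.isfinite(raw_depth)
    # Replace non-finite values so the range comparisons are well defined.
    d = np.nan_to_num(raw_depth, nan=0.0, posinf=0.0, neginf=0.0)
    invalid |= (d < min_depth) | (d > max_depth)
    return invalid


def naive_neighbor_fill(raw_depth: np.ndarray, mask: np.ndarray,
                        iterations: int = 50):
    """Hypothetical non-learned baseline: grow valid depth into masked pixels.

    Each iteration assigns every still-masked pixel that touches at least one
    known pixel (4-neighborhood) the mean of those known neighbors.
    Returns the filled depth map and a boolean map of pixels that have a value.
    """
    depth = np.where(mask, 0.0, np.nan_to_num(raw_depth, nan=0.0)).astype(float)
    filled = ~mask
    for _ in range(iterations):
        if filled.all():
            break
        pd = np.pad(depth, 1, mode="constant")
        pk = np.pad(filled, 1, mode="constant").astype(float)
        # Neighbor views: up, down, left, right (unknown neighbors weigh 0).
        views = [(slice(None, -2), slice(1, -1)), (slice(2, None), slice(1, -1)),
                 (slice(1, -1), slice(None, -2)), (slice(1, -1), slice(2, None))]
        total = sum(pd[v] * pk[v] for v in views)
        count = sum(pk[v] for v in views)
        grow = ~filled & (count > 0)
        depth[grow] = total[grow] / count[grow]
        filled = filled | grow
    return depth, filled


raw = np.array([[2.0, np.nan, 0.0, np.nan, 4.0]])
mask = depth_invalid_mask(raw)          # the zero reading is also masked
filled_depth, has_value = naive_neighbor_fill(raw, mask)
print(filled_depth)                     # [[2. 2. 3. 4. 4.]]
```

Averaging neighbors ignores color, so it blurs depth across object boundaries; using visual context from the color image, as LingBot-Depth does, is meant to avoid exactly that.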

According to the authors, it outperforms leading RGB-D hardware in both depth precision and coverage, and learns a unified representation of color and depth for robotics and autonomous driving.

Masked Depth Modeling for Spatial Perception

- Project: https://technology.robbyant.com/lingbot-depth

- HuggingFace: https://huggingface.co/robbyant/lingbot-depth

- Paper: https://github.com/Robbyant/lingbot-depth/blob/main/tech-report.pdf

Our report: https://mp.weixin.qq.com/s/_pTyp6hwmnLQUEeEzdp2Qw
