Embodied AI Reading Notes (@EmbodiedAIRead)

2026-01-01 | ❤️ 112 | 🔁 21

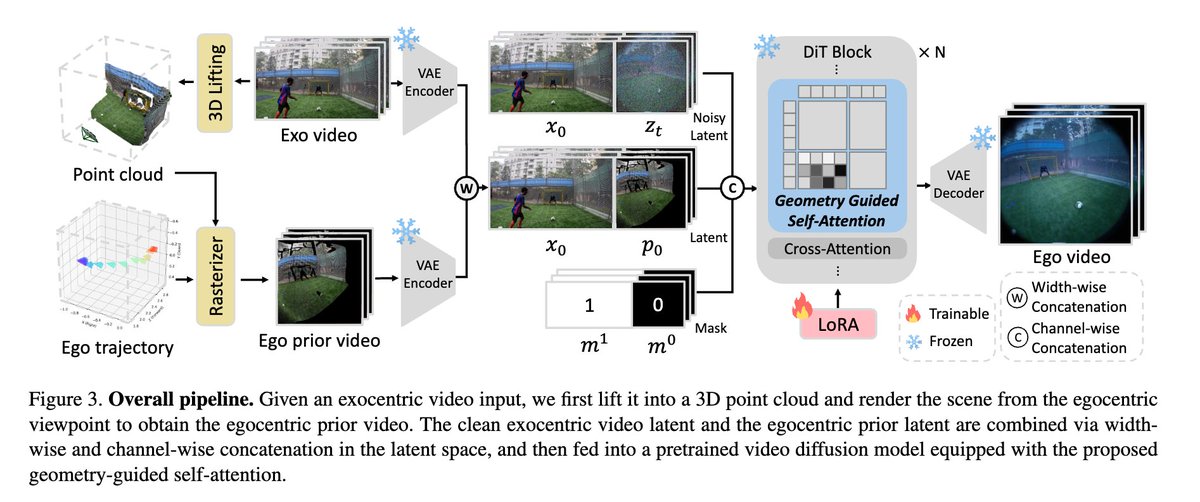

EgoX: Egocentric Video Generation from a Single Exocentric Video

Project: https://keh0t0.github.io/EgoX/

Paper: https://arxiv.org/pdf/2512.08269

This paper achieves high geometric coherence and visual fidelity when generating egocentric videos from a single exocentric video.

-

Why this is important: consistent egocentric videos open up many new possibilities for robot learning. If egocentric videos can be generated with high quality and diversity, and in large quantities, synthetic data becomes a powerful complement to real-world data.

-

Problem definition: given an exocentric video sequence and egocentric camera poses, the goal is to generate the corresponding egocentric video sequence that depicts the same scene from a first-person viewpoint.
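The egocentric camera poses are what pin down the target view. A minimal sketch of how such a pose is typically used, assuming a standard pinhole camera with intrinsics `K` and a world-to-camera pose `(R, t)`; both are assumptions, since the post does not state the paper's camera convention, and `project_to_ego` is a hypothetical name.

```python
import numpy as np

def project_to_ego(points_world, K, R, t):
    """Project N x 3 world points into a (hypothetical) ego camera.

    Assumes a pinhole model and a world-to-camera pose: x_cam = R @ x_world + t.
    Returns N x 2 pixel coordinates and the N camera-space depths.
    Points with depth <= 0 lie behind the camera and should be discarded.
    """
    cam = points_world @ R.T + t           # world -> camera coordinates
    depth = cam[:, 2]
    uv_h = cam @ K.T                        # homogeneous pixel coordinates
    uv = uv_h[:, :2] / uv_h[:, 2:3]         # perspective divide
    return uv, depth

# Example: focal length 500 px, principal point (320, 240), identity pose.
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
uv, depth = project_to_ego(np.array([[0.0, 0.0, 2.0], [1.0, 0.0, 2.0]]),
                           K, np.eye(3), np.zeros(3))
# uv == [[320, 240], [570, 240]], depth == [2, 2]
```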

-

Challenge: preserve the visible content in the exocentric view while synthesizing unseen regions in a geometrically consistent and realistic manner.
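To make the visible/unseen split concrete, here is a toy reprojection. Colored 3D points, assumed to be already lifted from the exocentric frames (for example via an estimated depth map; the post does not say how depth is obtained), are splatted into the ego camera with a z-buffer. Pixels that receive a point carry preserved exocentric content, and the remaining holes are what must be synthesized. This is a one-pixel nearest-point splat written for clarity, not the paper's renderer, and `render_prior` is a hypothetical name.

```python
import numpy as np

def render_prior(points_world, colors, K, R, t, height, width):
    """Splat colored 3D points into an ego camera using a z-buffer.

    Returns (image, mask): image is H x W x 3; mask is H x W bool and is True
    where at least one point landed (visible content). mask == False marks
    unseen regions that a generator would have to fill in.
    """
    cam = points_world @ R.T + t            # world -> ego camera coordinates
    z = cam[:, 2]
    front = z > 1e-6                         # drop points behind the camera
    cam, z, colors = cam[front], z[front], colors[front]

    uv = (cam @ K.T)[:, :2] / z[:, None]    # pinhole projection
    u = np.round(uv[:, 0]).astype(int)
    v = np.round(uv[:, 1]).astype(int)
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, z, colors = u[inside], v[inside], z[inside], colors[inside]

    image = np.zeros((height, width, 3), dtype=colors.dtype)
    zbuf = np.full((height, width), np.inf)
    for i in range(len(z)):
        # Keep only the nearest point per pixel (occlusion handling).
        if z[i] < zbuf[v[i], u[i]]:
            zbuf[v[i], u[i]] = z[i]
            image[v[i], u[i]] = colors[i]
    return image, np.isfinite(zbuf)
```

Real point-cloud renderers usually splat each point over a small footprint; the single-pixel splat here exaggerates the holes between samples.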

-

Method: (1) The exocentric sequence is first lifted into a 3D point cloud, which is rendered from the target egocentric viewpoint to produce an egocentric prior video. (2) This prior video and the original exocentric video are then provided as inputs to a pretrained video diffusion model adapted with LoRA, which generates the egocentric video. (3) A geometry-guided self-attention in the DiT (Diffusion Transformer) adaptively focuses on view-consistent regions and enhances feature coherence across perspectives.
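A toy NumPy sketch of two ingredients named in steps (2) and (3): a LoRA-adapted linear layer (a frozen weight plus a zero-initialized low-rank update) and single-head self-attention with an additive geometric bias on the logits. The bias used here, cosine similarity between per-token 3D ray directions, is a hypothetical stand-in chosen for illustration; the post does not describe how EgoX computes its geometric guidance. All names (`LoRALinear`, `geometry_biased_attention`, `dirs`, `beta`) are hypothetical.

```python
import numpy as np

class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, W, r=4, alpha=8.0, seed=0):
        rng = np.random.default_rng(seed)
        d_out, d_in = W.shape
        self.W = W                                  # frozen pretrained weight
        self.A = rng.normal(0.0, 0.01, (r, d_in))   # trainable, small random init
        self.B = np.zeros((d_out, r))               # trainable, zero init
        self.scale = alpha / r

    def __call__(self, x):
        # x: (n, d_in) -> (n, d_out). With B == 0 this equals x @ W.T exactly.
        return x @ self.W.T + self.scale * (x @ self.A.T) @ self.B.T


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)         # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def geometry_biased_attention(x, q_proj, k_proj, v_proj, dirs, beta=1.0):
    """Single-head self-attention with a HYPOTHETICAL geometric bias.

    dirs: (n, 3) unit ray direction per token (assumed given). Adding
    beta * cos(angle between rays) to the logits makes tokens that look
    along similar 3D directions attend to each other more strongly.
    """
    q, k, v = q_proj(x), k_proj(x), v_proj(x)
    logits = q @ k.T / np.sqrt(q.shape[-1])         # scaled dot-product scores
    logits = logits + beta * (dirs @ dirs.T)        # additive geometry bias
    weights = softmax(logits, axis=-1)
    return weights @ v, weights
```

Zero-initializing `B` means the adapted layer starts out identical to the pretrained one, which is the standard LoRA design choice; for PyTorch models, libraries such as Hugging Face PEFT provide this pattern.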
