Xiaolong Wang (@xiaolonw)

2026-01-13 | ❤️ 199 | 🔁 24

After years of research, there is finally a solution to long context!

TTT-E2E is how robotics will work in the future. Humanoids ingest vision, touch, audio – almost everything humans do. It only makes sense if robot memory works like human memory: learning during deployment.

Quoted tweet

Karan Dalal (@karansdalal)

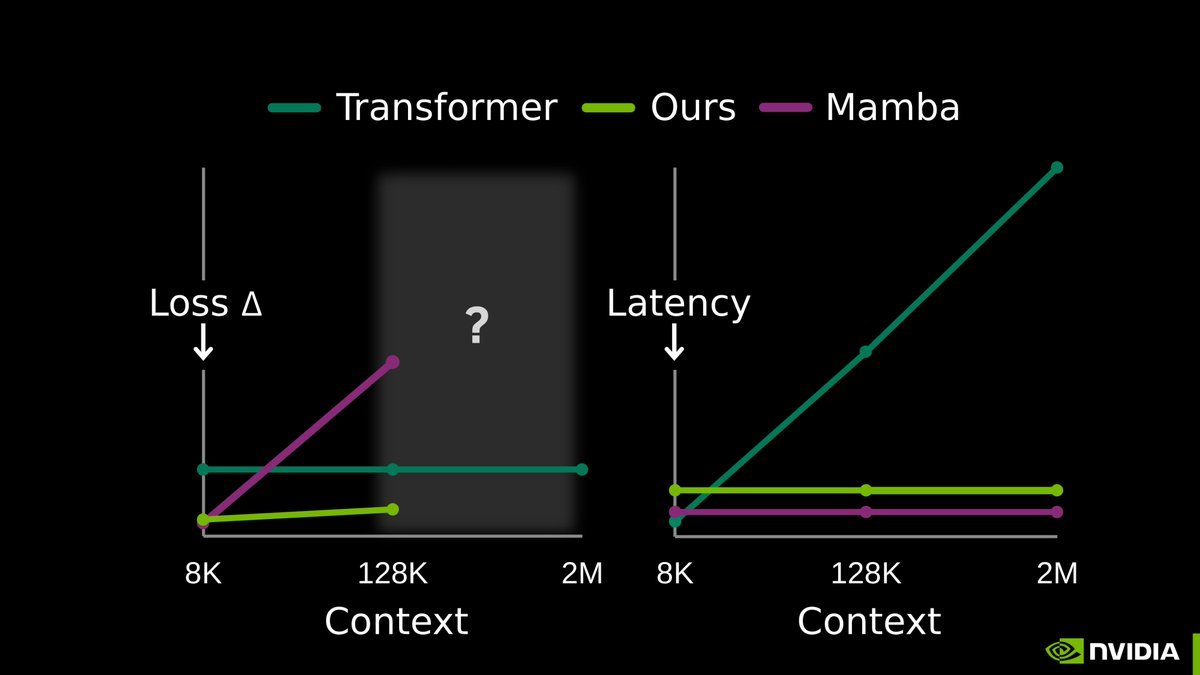

LLM memory is considered one of the hardest problems in AI.

All we have today are endless hacks and workarounds. But the root solution has always been right in front of us.

Next-token prediction is already an effective compressor. We don’t need a radical new architecture. The missing piece is to continue training the model at test-time, using context as training data.

Our full release of End-to-End Test-Time Training (TTT-E2E) with @NVIDIAAI, @AsteraInstitute, and @StanfordAILab is now available.

Blog: https://t.co/woCpiIrq0T
arXiv: https://t.co/3VkFlS3wx3

This has been over a year in the making with @arnuvtandon and an incredible team.