Yam Peleg (@Yampeleg)

2024-11-07 | ❤️ 1341 | 🔁 163

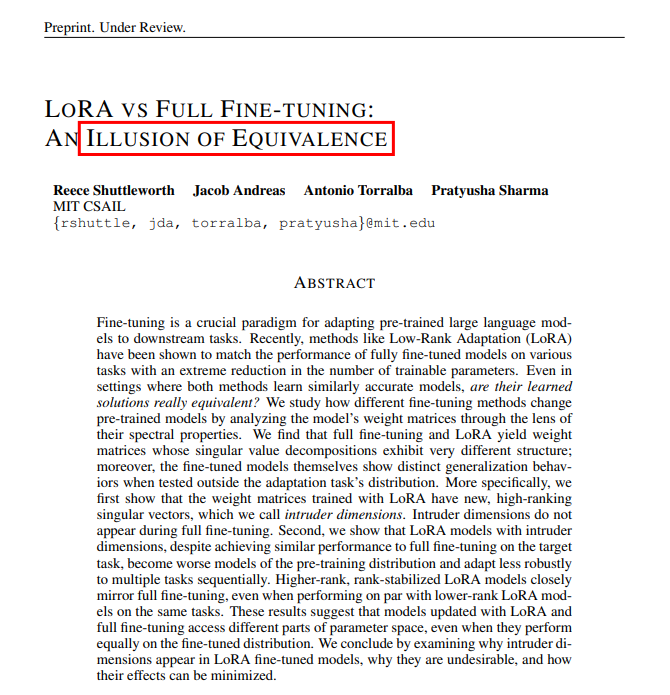

a very hot paper just dropped.. https://x.com/Yampeleg/status/1854608593737466221/photo/1

Quoted tweet

kalomaze (@kalomaze)
