Yuki (@y_m_asano)

2026-01-08 | ❤️ 107 | 🔁 10

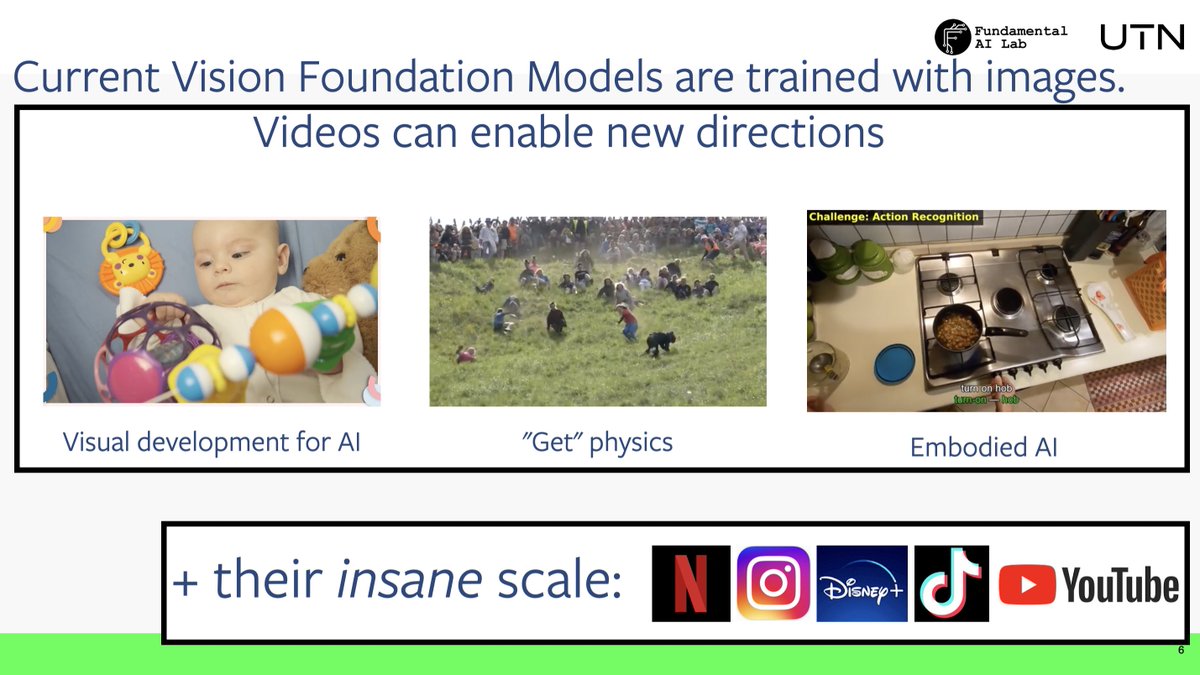

100% agree, and it’s the only area where I’m willing to bet: best vision models (images/videos/3d/4d) will come from large-scale video in the midterm (1-3years).

Videos + VGGT-style also enable other scalable learning pipelines, like for point clouds https://arxiv.org/abs/2512.23042 https://x.com/y_m_asano/status/2009188438835446078/photo/1

🔗 원본 링크

미디어

요약

향후 1~3년 내 최고의 비전 모델(이미지·비디오·3D·4D)은 대규모 비디오 학습에서 나올 가능성이 크다는 전망이다. 이미지도 참고하면 비디오는 물리 이해·Embodied AI로 확장되며, VGGT류 파이프라인을 통해 3D-Vision/dynamic/point-cloud 학습까지 스케일링할 수 있다는 요지다.

🔗 Related

Auto-generated - needs manual review

인용 트윗

Saining Xie (@sainingxie)

also putting on my scaling hat, I think we need a HUGE amount of long, complex video for pretty much anything we want to explore here. with images and image-based tasks, the regularities (and the levels of them) needed just aren’t enough to really guide us. curious about your thoughts